General Description:

Fast dual proximal gradient method for solving problems of the form

Assumptions:

Available Oracles:

(the function values of f,g are not required in the economical setting)

Usage:

out = fdpg(Ffun,F_grad_conj,Gfun,Gfun_prox,Afun,Atfun,lambda,starty,[par]) [out,fmin] = fdpg(Ffun,F_grad_conj,Gfun,Gfun_prox,Afun,Atfun,lambda,starty,[par]) [out,fmin,parout] = fdpg(Ffun,F_grad_conj,Gfun,Gfun_prox,Afun,Atfun,lambda,starty,[par]) Input:Ffun - function handle for f (if f is extended real-valued, it is enough to specify the operation of f on its domain) F_grad_conj - function handle for the gradient of the conjugate of f (for a given w, the oracle outputs argmax{<x,w>-f(x)})

Gfun - function handle for g (if g is extended real-valued, it is enough to specify the operation of g on its domain)

Gfun_prox - function handle for the proximal mapping of g times a positive constant

Afun - function handle for the linear transformation A

Atfun - function handle for the adjoint of the linear transformation A

lambda - positive constant

starty - starting vector of dual variables (resides in the image space of A) par - struct containing different values required for the operation of fdpg Output:out - optimal solution (up to a tolerance) fmin - optimal value (up to a tolerance) parout - a struct containing containing additional information related to the convergence. The fields of parout are: iterNum - number of performed iterations

funValVec - vector of all function values generated by the method Method Description:

Employs the fast dual proximal gradient method whose general update step is

where Lk is chosen either as a constant or by a backtracking procedure (default). The last computed vector uk is considered as the obtained solution and the last computed yk is the obtained dual vector.

References:

Consider the problem of projecting a vector d on a polytope:

where d,A and b are generated as follows.

>> randn('seed',314);>> A=randn(20,30);>> b=randn(20,1);>> d=randn(30,1);To solve the problem, we will use the FDPG method with f, g and lambda are taken as

Note that since g is an indicator, lambda could have been chosen as any positive constant.

We can now run fdpg (the initial vector is of the same dimension as the image space of A)

*********************fdpg*********************#iter fun. val. feas. viol. L val. 1 0.000000 12.8042 64.000000 2 0.657062 6.46853 64.000000 3 1.707604 2.85988 64.000000 4 2.479346 1.20314 64.000000 : : : : 74 2.754022 8.82372e-05 64.000000 75 2.754018 0.000152388 64.000000 76 2.754017 0.00019299 64.000000 77 2.754018 0.000207248 64.000000Stopping because the norm of the difference between consecutive iterates is too small----------------------------------Optimal value = 2.754018 Note that the Gfun_prox input was computed by the formula min(x,b) since

The formula for the F_grad_conj is a result of the following computation:

The constraint violation of the obtained solution can be computed by

>> norm(max(A*out-b,0))ans = 2.072481037126269e-04which is obviously the same as

>> parout.feasVal(end)ans = 2.072481037126269e-04The maximal amount of iterations by default is 1000, but the algorithm stopped after 77 iterations since the difference between consecutive iterates was too small. To enforce the method to run for more than 77 iterations, we can change the parameter eps that controls the upper bound on the distance between consecutive iterates (whose default is 1e-5)

>> clear par>> par.eps=1e-8;>> [out,fmin,parout]=fdpg(@(x)0.5*norm(x-d)^2,@(x)x+d,@(x)0,@(x,a)min(x,b),@(x)A*x,@(x)A'*x,1,zeros(20,1),par);*********************fdpg*********************#iter fun. val. feas. viol. L val. 1 0.000000 12.8042 64.000000 2 0.657062 6.46853 64.000000 3 1.707604 2.85988e 64.000000 4 2.479346 1.20314 64.000000 5 2.822158 0.590423 64.000000 6 2.903982 0.283675 64.000000 7 2.879172 0.141183 64.000000 8 2.835106 0.0841442 64.000000 : : : :

148 2.754038 7.87737e-07 64.000000 149 2.754038 1.12641e-06 64.000000 150 2.754038 1.29568e-06 64.000000 151 2.754038 1.29757e-06 64.000000Stopping because the norm of the difference between consecutive iterates is too small----------------------------------Optimal value = 2.754038 Obviously, more iterations were performed under the new tolerance parameter. Note also that although the obtained function value is slightly higher than the one obtained with eps=1e-5, the feasibility violation of the obtained solution is significantly better.

Example 2:

Consider the following denoising problem:

where y is a noisy step function generated as follows.

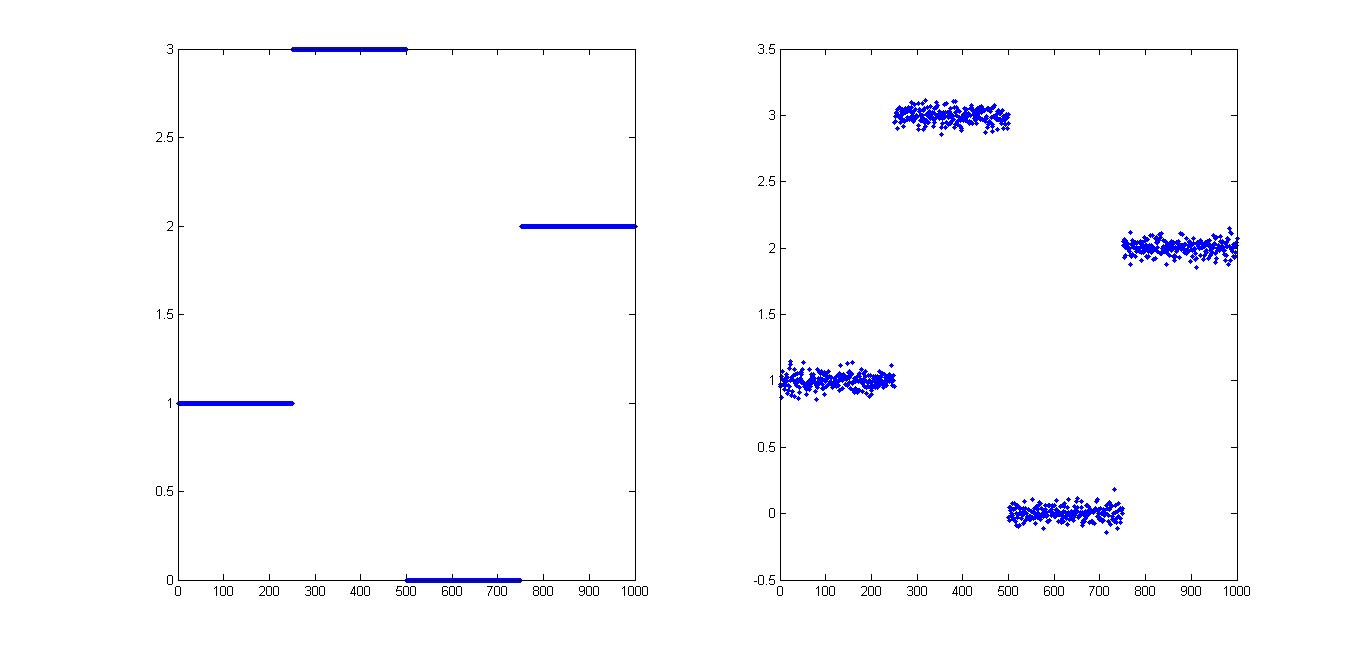

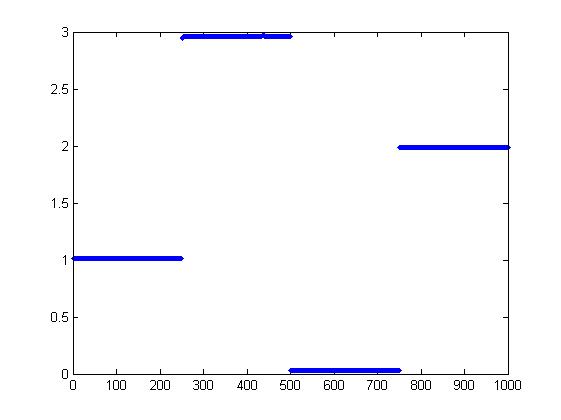

>> randn('seed',314);>> x=zeros(1000,1);>> x(1:250)=1;>> x(251:500)=3;>> x(751:1000)=2;>> y=x+0.05*randn(size(x));The "true" and noisy signals can be plotted.

where

and A given by

>> A=sparse(999,1000);>> for i=1:999>> A(i,i)=1;>> A(i,i+1)=-1;>> endWe can now solve the problem using fdpg

>> [out,fmin,parout] = fdpg(@(x)0.5*norm(x-y)^2,@(x)x+y,@(x)norm(x,1),@(x,a)prox_l1(x,a),@(x)A*x,@(x)A'*x,4,zeros(999,1));*********************fdpg*********************#iter fun. val. feas. viol. L val. 1 248.511179 3.1607e-07 4.000000 2 107.310785 3.1607e-07 4.000000 3 74.301824 3.1607e-07 4.000000 4 60.396805 3.1607e-07 4.000000 5 53.851067 3.1607e-07 4.000000 996 28.915146 3.15595e-07 4.000000 997 28.909778 3.15432e-07 4.000000 998 28.904498 3.14599e-07 4.000000 999 28.899496 3.13701e-07 4.000000 1000 28.895505 3.13267e-07 4.000000----------------------------------Optimal value = 28.895505 Since the function g is real-valued, it is better to invoke the function with real_valued_flag set to true, since in this case there is no need to check for feasibility violation and the algorithm outputs the iterate with the smallest function value.

>> clear par>> par.real_valued_flag=true;>> [out,fmin,parout] = fdpg(@(x)0.5*norm(x-y)^2,@(x)x+y,@(x)norm(x,1),@(x,a)prox_l1(x,a),@(x)A*x,@(x)A'*x,4,zeros(999,1),par);*********************fdpg*********************#iter fun. val. L val. 2 107.310785 4.000000 3 74.301824 4.000000 4 60.396805 4.000000 5 53.851067 4.000000 6 50.175863 4.000000 7 48.060216 4.000000 8 46.621121 4.000000 9 45.632373 4.000000 10 45.103918 4.000000 11 44.149710 4.000000 15 43.972166 4.000000 : : :

496 28.942826 4.000000 497 28.926443 4.000000 498 28.910757 4.000000 499 28.899488 4.000000 500 28.892469 4.000000----------------------------------Optimal value = 28.892469 The last recorded iteration is 500 since there was no improvement of function value following that iteration (although 1000 iterations were employed). Note also that a slightly smaller function value was obtained in this case. The obtained solution is an excellent reconstruction of the original signal.

Example 3:

Consider the problem

where

and

The matrices A,B,C,D are generated as follows.

>> randn('seed',314);>> rand('seed',314);>> A=randn(20,30);>> B=randn(40,50);>> C=1+rand(30,40);>> D=randn(30,40);To solve the problem, we will use the FDPG method with lambda=1,

and the linear transformation being

The command invoking fdpg is >> E = 1./(C.^2);>> clear par>> par.real_valued_flag=true;>> [out,fmin,parout] = fdpg(@(X)0.5*norm(C.*(X-D),'fro')^2,@(X)E.*X+D,...@(X)norm(X,'fro'),@(x,a)prox_Euclidean_norm(x,a),@(X)A*X*B,@(X)A'*X*B',1,zeros(20,50),par); *********************fdpg*********************#iter fun. val. L val. 2 693.740808 4096.000000 3 623.515605 4096.000000 6 553.714050 8192.000000 : : :

279 485.921545 8192.000000 280 485.921545 8192.000000 281 485.921545 8192.000000 282 485.921545 8192.000000 283 485.921545 8192.000000Stopping because the norm of the difference between consecutive iterates is too small----------------------------------Optimal value = 485.921545 |

Solvers >